Beyond The Satellites

Today we go beyond all of the satellites, and into the mind of the computer.

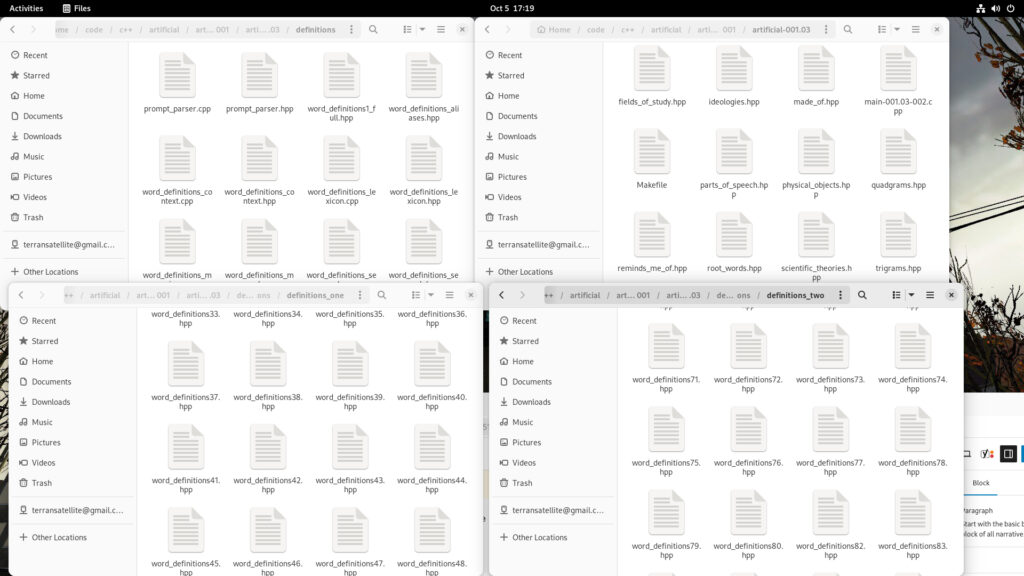

Here is a list of all of the files I have filled with definitions for words:

word_definitions1.hpp

word_definitions2.hpp

word_definitions3.hpp

word_definitions4.hpp

word_definitions5.hpp

word_definitions6.hpp

word_definitions7.hpp

word_definitions8.hpp

word_definitions9.hpp

word_definitions10.hpp

word_definitions11.hpp

word_definitions12.hpp

word_definitions13.hpp

word_definitions14.hpp

word_definitions15.hpp

word_definitions16.hpp

word_definitions17.hpp

word_definitions18.hpp

word_definitions19.hpp

word_definitions20.hpp

word_definitions21.hpp

word_definitions22.hpp

word_definitions23.hpp

word_definitions24.hpp

word_definitions25.hpp

word_definitions26.hpp

word_definitions27.hpp

word_definitions28.hpp

word_definitions29.hpp

word_definitions30.hpp

word_definitions31.hpp

word_definitions32.hpp

word_definitions33.hpp

word_definitions34.hpp

word_definitions35.hpp

word_definitions36.hpp

word_definitions37.hpp

word_definitions38.hpp

word_definitions39.hpp

word_definitions40.hpp

word_definitions41.hpp

word_definitions42.hpp

word_definitions43.hpp

word_definitions44.hpp

word_definitions45.hpp

word_definitions46.hpp

word_definitions47.hpp

word_definitions48.hpp

word_definitions49.hpp

word_definitions50.hpp

word_definitions51.hpp

word_definitions52.hpp

word_definitions53.hpp

word_definitions54.hpp

word_definitions55.hpp

word_definitions56.hpp

word_definitions57.hpp

word_definitions58.hpp

word_definitions59.hpp

word_definitions60.hpp

word_definitions61.hpp

word_definitions62.hpp

word_definitions63.hpp

word_definitions64.hpp

word_definitions65.hpp

word_definitions66.hpp

word_definitions67.hpp

word_definitions68.hpp

word_definitions69.hpp

word_definitions70.hpp

word_definitions71.hpp

word_definitions72.hpp

word_definitions73.hpp

word_definitions74.hpp

word_definitions75.hpp

word_definitions76.hpp

word_definitions77.hpp

word_definitions78.hpp

word_definitions79.hpp

word_definitions80.hpp

word_definitions81.hpp

word_definitions82.hpp

word_definitions83.hpp

word_definitions84.hpp

word_definitions85.hpp

word_definitions86.hpp

word_definitions87.hpp

word_definitions88.hpp

word_definitions89.hpp

word_definitions90.hpp

word_definitions91.hpp

word_definitions92.hpp

word_definitions93.hpp

word_definitions94.hpp

word_definitions95.hpp

word_definitions96.hpp

word_definitions97.hpp

word_definitions98.hpp

word_definitions99.hpp

word_definitions100.hpp

word_definitions101.hpp

word_definitions102.hpp

Here is one of those files:

// word_definitions7.hpp — DEFINITIONS (with ALL parameters inside each entry)

// Letter focus: “G”

#pragma once

#include <map>

#include <string>

#include <vector>

#include <algorithm>

// -----------------------------------------------------------------------------

// WordDefinition: lexical fields + FULL parameter set (defaults keep false/empty)

// Set only the fields that are TRUE / meaningful per entry.

// -----------------------------------------------------------------------------

struct WordDefinition {

// === Core lexical fields ===

std::string part_of_speech; // e.g., "noun", "verb", "abbr.", "proper noun"

std::string concise; // one-liner gloss

std::string expanded; // brief expanded definition

std::vector<std::string> synonyms; // near-synonyms

std::vector<std::string> antonyms; // conceptual opposites

std::vector<std::string> related; // collocations/associations

std::string register_label; // neutral/formal/technical/etc.

std::string domains; // subject domains

std::string etymology; // origin note

std::string example; // usage sentence

// === FULL parameter set (leave unset = false/empty) ===

// Core semantic context

std::string about{};

std::string description{};

double weight = 0.0; // [0..1]

double attention = 0.0; // [0..1]

std::vector<std::string> reminds_you_of{};

std::vector<std::string> tags{};

// Linguistic categories

bool is_proper_noun = false;

bool is_common_noun = false;

bool is_verb = false;

bool is_adjective = false;

bool is_adverb = false;

bool is_pronoun = false;

bool is_conjunction = false;

bool is_preposition = false;

bool is_determiner = false;

bool is_abbreviation = false;

bool is_acronym = false;

bool is_slang = false;

bool is_technical_term = false;

// Named-entity style flags

bool is_person_name = false;

bool is_place = false;

bool is_country = false;

bool is_region = false;

bool is_city = false;

bool is_organization = false;

bool is_product = false;

bool is_brand = false;

bool is_language = false;

bool is_unit = false;

bool is_numeric_literal = false;

// Computing/engineering

bool is_computer_term = false;

bool is_algorithm = false;

bool is_data_structure = false;

bool is_api = false;

bool is_protocol = false;

bool is_file_format = false;

bool is_library = false;

bool is_framework = false;

bool is_tool = false;

bool is_command = false;

bool is_programming_language = false;

bool is_compiler = false;

bool is_interpreter = false;

bool is_runtime = false;

bool is_build_system = false;

// Software form factors

bool is_application = false;

bool is_console_app = false;

bool is_gui_app = false;

bool is_mobile_app = false;

bool is_web_app = false;

bool is_service_daemon = false;

bool is_driver = false;

bool is_game = false;

bool is_3d_app = false;

// Systems / hardware

bool is_hardware = false;

bool is_cpu_related = false;

bool is_gpu_related = false;

bool is_memory_related = false;

bool is_storage_related = false;

bool is_networking = false;

// Security / identity

bool is_security = false;

bool is_encryption = false;

bool is_authentication = false;

bool is_authorization = false;

// Math/Science

bool is_math = false;

bool is_statistics = false;

// Natural-language & register

bool is_term_of_art = false;

bool is_colloquial = false;

// Narrative/context metadata

std::string who, what_for, who_for, when, where, why;

std::vector<std::string> examples;

std::vector<std::string> see_also;

std::string intention;

};

// -----------------------------------------------------------------------------

// Small factory to keep entries readable (pure C++)

// -----------------------------------------------------------------------------

inline WordDefinition WD(const char* pos, const char* concise, const char* expanded,

std::initializer_list<const char*> syn,

std::initializer_list<const char*> ant,

std::initializer_list<const char*> rel,

const char* reg, const char* dom,

const char* ety, const char* ex) {

WordDefinition w;

w.part_of_speech = pos;

w.concise = concise;

w.expanded = expanded;

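w.synonyms.assign(syn.begin(), syn.end()); // copy each const char* into a std::string

w.antonyms.assign(ant.begin(), ant.end());

w.related.assign(rel.begin(), rel.end());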

w.register_label = reg;

w.domains = dom;

w.etymology = ety;

w.example = ex;

return w;

}

template <class Fn>

inline WordDefinition WD(const char* pos, const char* concise, const char* expanded,

std::initializer_list<const char*> syn,

std::initializer_list<const char*> ant,

std::initializer_list<const char*> rel,

const char* reg, const char* dom,

const char* ety, const char* ex, Fn fn) {

WordDefinition w = WD(pos, concise, expanded, syn, ant, rel, reg, dom, ety, ex);

fn(w); // set flags/extra fields you care about

return w;

}

// -----------------------------------------------------------------------------

// DEFINITIONS — 20 “G” entries with inlined extra parameters where relevant

// -----------------------------------------------------------------------------

const std::map<std::string, WordDefinition> word_definitions7_map = {

{ "garbage collection", WD(

"noun",

"Automatic reclamation of unused memory.",

"Runtime process that identifies unreachable objects and frees their memory.",

{"gc"}, {"manual memory management"},

{"tracing","mark-and-sweep","generational"},

"technical","runtimes, memory","General CS term.",

"Tune garbage collection to reduce pause times.",

[](auto& w){

w.about="memory management"; w.weight=0.66; w.attention=0.68;

w.reminds_you_of={"heap","pause","mark/sweep","generational"};

w.tags={"runtime","memory"};

w.is_computer_term = w.is_runtime = w.is_memory_related = w.is_technical_term = true;

w.is_abbreviation = true; // “GC”

})

},

{ "garbage collector", WD(

"noun",

"Component that performs garbage collection.",

"Runtime subsystem that detects dead objects and reclaims their memory.",

{"gc"}, {"manual allocator"},

{"heap","allocator","write barrier"},

"technical","runtimes, memory","General CS term.",

"The garbage collector runs concurrently with mutators.",

[](auto& w){

w.about="runtime component"; w.weight=0.64; w.attention=0.66;

w.reminds_you_of={"mutator","heap","barrier"};

w.tags={"runtime","memory"};

w.is_computer_term = w.is_runtime = w.is_memory_related = w.is_technical_term = true;

w.is_abbreviation = true;

})

},

{ "gateway (network)", WD(

"noun",

"Node that routes traffic between networks.",

"A router or device that connects different networks, often enforcing policies.",

{"router","edge"}, {"isolated host"},

{"nat","firewall","default route"},

"neutral","networking","Networking term.",

"Configure the default gateway on the host.",

[](auto& w){

w.about="routing"; w.weight=0.58; w.attention=0.58;

w.reminds_you_of={"router","nat","egress"};

w.tags={"net","routing"};

w.is_computer_term = w.is_networking = w.is_technical_term = true;

})

},

{ "gdb", WD(

"abbr.",

"GNU Debugger.",

"Command-line debugger for programs, supporting breakpoints, stepping, and inspection.",

{"debugger"}, {"printf-only debugging"},

{"breakpoint","core dump","symbols"},

"neutral","tooling, runtimes","GNU project.",

"Attach gdb to inspect the crashing process.",

[](auto& w){

w.about="debugging"; w.weight=0.60; w.attention=0.61;

w.reminds_you_of={"breakpoints","bt","core"};

w.tags={"tooling","debug"};

w.is_computer_term = w.is_tool = w.is_console_app = w.is_technical_term = true;

w.is_abbreviation = true;

})

},

{ "generator (programming)", WD(

"noun",

"Function that yields a sequence lazily.",

"A callable that produces values on demand, often preserving state between yields.",

{"coroutine (related)"}, {"materialized list only"},

{"yield","iterator","lazy"},

"technical","languages, runtimes","Language/runtime concept.",

"Use a generator to stream large results.",

[](auto& w){

w.about="lazy evaluation"; w.weight=0.57; w.attention=0.57;

w.reminds_you_of={"yield","iterator","coroutine"};

w.tags={"lang","runtime"};

w.is_computer_term = w.is_technical_term = true;

})

},

{ "generic programming", WD(

"noun",

"Writing algorithms and data structures parameterized by types.",

"Technique emphasizing abstraction over types (e.g., templates, generics) for reuse and performance.",

{"parametric polymorphism"}, {"monomorphic only"},

{"template","trait","concepts"},

"technical","languages, design","C++/ML heritage.",

"Use generic programming to avoid code duplication.",

[](auto& w){

w.about="abstraction"; w.weight=0.58; w.attention=0.58;

w.reminds_you_of={"templates","traits","concepts"};

w.tags={"lang","design"};

w.is_computer_term = w.is_technical_term = true;

})

},

{ "genetic algorithm", WD(

"noun",

"Optimization heuristic inspired by natural selection.",

"Population-based search using selection, crossover, and mutation to evolve solutions.",

{"evolutionary algorithm"}, {"exhaustive search"},

{"fitness","mutation","crossover"},

"neutral","algorithms, optimization","AI/optimization field.",

"A genetic algorithm found a near-optimal schedule.",

[](auto& w){

w.about="optimization"; w.weight=0.56; w.attention=0.56;

w.reminds_you_of={"fitness","mutation","population"};

w.tags={"ai","algo"};

w.is_computer_term = w.is_algorithm = w.is_technical_term = true;

})

},

{ "git", WD(

"proper noun",

"Distributed version control system.",

"Tool that tracks changes with commits, branches, and merges across repositories.",

{"dvcs"}, {"centralized-only VCS"},

{"commit","branch","merge"},

"neutral","version control, tooling","Created by Linus Torvalds.",

"Push the branch to the remote in git.",

[](auto& w){

w.about="version control"; w.weight=0.63; w.attention=0.65;

w.reminds_you_of={"commit","rebase","merge"};

w.tags={"vcs","tooling"};

w.is_computer_term = w.is_tool = w.is_technical_term = true;

w.is_proper_noun = true;

})

},

{ "git commit", WD(

"noun / verb",

"Record of changes in a repository with metadata.",

"Creates an immutable snapshot with author, message, and parent pointers.",

{"revision"}, {"untracked change"},

{"diff","sha","message"},

"neutral","version control","Git term.",

"Write a descriptive message for the git commit.",

[](auto& w){

w.about="change unit"; w.weight=0.56; w.attention=0.55;

w.reminds_you_of={"diff","sha1","history"};

w.tags={"vcs","git"};

w.is_computer_term = w.is_technical_term = true;

})

},

{ "git merge", WD(

"noun / verb",

"Combine histories from different branches.",

"Integrates changes, creating a merge commit if necessary.",

{"integrate"}, {"rebase (contrast)"},

{"conflict","fast-forward"},

"neutral","version control","Git term.",

"Resolve conflicts during the git merge.",

[](auto& w){

w.about="history integration"; w.weight=0.56; w.attention=0.56;

w.reminds_you_of={"conflict","ff","three-way"};

w.tags={"vcs","git"};

w.is_computer_term = w.is_technical_term = true;

})

},

{ "git rebase", WD(

"noun / verb",

"Replay commits onto a new base.",

"Rewrites history by applying changes on top of another branch.",

{"history rewrite"}, {"merge commit"},

{"interactive","squash","fixup"},

"neutral","version control","Git term.",

"Use git rebase -i to clean up commits.",

[](auto& w){

w.about="history rewriting"; w.weight=0.56; w.attention=0.57;

w.reminds_you_of={"squash","fixup","interactive"};

w.tags={"vcs","git"};

w.is_computer_term = w.is_technical_term = true;

})

},

{ "glsl", WD(

"abbr.",

"OpenGL Shading Language.",

"C-like language for programming GPU shaders in the OpenGL pipeline.",

{"shader language"}, {"fixed-function pipeline"},

{"vertex","fragment","uniform"},

"technical","graphics, gpu","Khronos ecosystem.",

"Write the fragment shader in GLSL.",

[](auto& w){

w.about="shading language"; w.weight=0.58; w.attention=0.60;

w.reminds_you_of={"shader","OpenGL","uniforms"};

w.tags={"graphics","gpu"};

w.is_computer_term = w.is_programming_language = w.is_gpu_related = w.is_technical_term = true;

w.is_abbreviation = true;

})

},

{ "gpu (graphics processing unit)", WD(

"abbr. / noun",

"Highly parallel processor specialized for graphics/compute.",

"Hardware device optimized for SIMD/SIMT workloads, widely used for rendering and general-purpose acceleration.",

{"graphics card"}, {"cpu-only compute"},

{"cuda","vulkan","opengl"},

"technical","hardware, compute","Acronym.",

"Run the model on the GPU for speedup.",

[](auto& w){

w.about="parallel hardware"; w.weight=0.66; w.attention=0.68;

w.reminds_you_of={"cuda","shader","tensor cores"};

w.tags={"hardware","accel"};

w.is_computer_term = w.is_hardware = w.is_gpu_related = w.is_technical_term = true;

w.is_abbreviation = true;

})

},

{ "gui (graphical user interface)", WD(

"abbr. / noun",

"Visual interface with windows, icons, and controls.",

"Interaction style using graphics rather than exclusively text-based commands.",

{"graphical interface"}, {"cli"},

{"widget","window","event loop"},

"neutral","ux, applications","Acronym.",

"Provide a GUI for non-technical users.",

[](auto& w){

w.about="user interface"; w.weight=0.56; w.attention=0.55;

w.reminds_you_of={"widgets","windows","pointer"};

w.tags={"ux","app"};

w.is_computer_term = w.is_application = w.is_gui_app = w.is_technical_term = true;

w.is_abbreviation = true;

})

},

{ "graph (data structure)", WD(

"noun",

"Set of vertices connected by edges.",

"Abstract structure modeling relationships; can be directed/undirected, weighted/unweighted.",

{"network (math)"}, {"isolated set"},

{"vertex","edge","adjacency"},

"technical","data structures, algorithms","Math/CS term.",

"Model dependencies as a graph.",

[](auto& w){

w.about="relations"; w.weight=0.62; w.attention=0.61;

w.reminds_you_of={"nodes","edges","adjacency"};

w.tags={"datastruct","algo"};

w.is_computer_term = w.is_data_structure = w.is_math = w.is_technical_term = true;

})

},

{ "graph database", WD(

"noun",

"Database that stores data as nodes and edges.",

"Optimized for traversing relationships with graph queries (e.g., Gremlin, Cypher).",

{"graph db"}, {"strict relational-only"},

{"cypher","gremlin","triple store"},

"neutral","databases, storage","Modern DB type.",

"Use a graph database for relationship-heavy data.",

[](auto& w){

w.about="data storage"; w.weight=0.57; w.attention=0.57;

w.reminds_you_of={"nodes","edges","traversal"};

w.tags={"db","graph"};

w.is_computer_term = w.is_storage_related = w.is_technical_term = true;

})

},

{ "graph traversal", WD(

"noun",

"Systematic visit of graph nodes/edges.",

"Procedures like BFS/DFS to explore or query graphs.",

{"walk"}, {"random hop"},

{"bfs","dfs","path"},

"technical","algorithms, graphs","Core algo concept.",

"Perform a BFS graph traversal to find shortest paths in unweighted graphs.",

[](auto& w){

w.about="graph algorithms"; w.weight=0.58; w.attention=0.58;

w.reminds_you_of={"bfs","dfs","paths"};

w.tags={"graph","algo"};

w.is_computer_term = w.is_algorithm = w.is_technical_term = true;

w.is_abbreviation = false;

})

},

{ "greedy algorithm", WD(

"noun",

"Algorithm that makes the locally optimal choice at each step.",

"Heuristic that builds a solution piece by piece, hoping local optima lead to a global optimum.",

{"myopic heuristic"}, {"dynamic programming"},

{"optimal","approximation","local choice"},

"neutral","algorithms","Classic algo strategy.",

"Use a greedy algorithm for interval scheduling.",

[](auto& w){

w.about="algorithmic strategy"; w.weight=0.56; w.attention=0.55;

w.reminds_you_of={"local optimum","approximation","exchange argument"};

w.tags={"algo","heuristic"};

w.is_computer_term = w.is_algorithm = w.is_technical_term = true;

})

},

{ "grep", WD(

"noun / verb",

"Search for patterns in text using regular expressions.",

"Unix utility and family of tools for line-based regex searching.",

{"search"}, {"manual scanning"},

{"regex","ripgrep","ack"},

"neutral","tooling, text processing","From ed command g/re/p.",

"Grep the logs for error patterns.",

[](auto& w){

w.about="text search"; w.weight=0.58; w.attention=0.60;

w.reminds_you_of={"regex","ripgrep","pipe"};

w.tags={"cli","search"};

w.is_computer_term = w.is_tool = w.is_command = w.is_console_app = w.is_technical_term = true;

})

},

{ "gzip", WD(

"noun / verb",

"Popular lossless compression format and tool.",

"DEFLATE-based compressor used widely for files and HTTP content-encoding.",

{"deflate"}, {"store uncompressed"},

{"tar","http","content-encoding"},

"neutral","compression, networking","GNU tool/format.",

"Serve static assets with gzip encoding.",

[](auto& w){

w.about="compression"; w.weight=0.58; w.attention=0.59;

w.reminds_you_of={"deflate","tar","http"};

w.tags={"storage","net"};

w.is_computer_term = w.is_file_format = w.is_tool = w.is_technical_term = true;

})

},

}; // end word_definitions7_map
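To make the shape of the file above easier to see, here is a minimal sketch of the same pattern: a factory that takes the fixed fields plus a callback for the flags, and a table you can query. `MiniDefinition`, `make_def`, `sample_table`, `lookup`, and `tools_in` are names made up for this sketch, not part of the real headers, and the struct keeps only a handful of the real fields.

```cpp
#include <initializer_list>
#include <map>
#include <string>
#include <vector>

// Hypothetical, trimmed-down stand-in for WordDefinition (only a few fields).
struct MiniDefinition {
    std::string concise;
    std::vector<std::string> synonyms;
    bool is_tool = false;
    bool is_algorithm = false;
};

// Factory in the spirit of WD(...): fixed fields first, then a callback
// that sets whichever extra flags apply to this entry.
template <class Fn>
MiniDefinition make_def(const char* concise,
                        std::initializer_list<const char*> syn, Fn fn) {
    MiniDefinition d;
    d.concise = concise;
    // Copy element by element; each const char* becomes a std::string.
    d.synonyms.assign(syn.begin(), syn.end());
    fn(d);
    return d;
}

// Builds a three-entry sample table (text copied from the entries above).
inline std::map<std::string, MiniDefinition> sample_table() {
    return {
        {"gdb", make_def("GNU Debugger.", {"debugger"},
                         [](MiniDefinition& d) { d.is_tool = true; })},
        {"grep", make_def("Search for patterns in text using regular expressions.",
                          {"search"},
                          [](MiniDefinition& d) { d.is_tool = true; })},
        {"greedy algorithm",
         make_def("Algorithm that makes the locally optimal choice at each step.",
                  {"myopic heuristic"},
                  [](MiniDefinition& d) { d.is_algorithm = true; })},
    };
}

// Returns a pointer to the entry for `word`, or nullptr if it is absent.
inline const MiniDefinition* lookup(
    const std::map<std::string, MiniDefinition>& table, const std::string& word) {
    auto it = table.find(word);
    return it == table.end() ? nullptr : &it->second;
}

// Collects the headwords whose is_tool flag is set, in map (sorted) order.
inline std::vector<std::string> tools_in(
    const std::map<std::string, MiniDefinition>& table) {
    std::vector<std::string> out;
    for (const auto& kv : table) {
        if (kv.second.is_tool) out.push_back(kv.first);
    }
    return out;
}
```

With this sample table, `tools_in(sample_table())` gives back the headwords `gdb` and `grep`, and `lookup(table, "gzip")` returns `nullptr` because that word isn't in the sample.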

This is how you build an artificial intelligence, and it’s about all that you need to know besides the names of the individual parameters, which I’ll give you next.

Here are some of the parameters:

{ "type system", ultra::UltraPlus{

.purpose="prevent invalid programs",

.aims={"soundness"},

.approach="rules on values/ops; proofs/constraints",

.essence="discipline over types"

}},

Here's the rest:

{ "transactional outbox", defs::WordPatch{

.intention = std::optional<std::string>("avoid dual-write inconsistency by atomically recording events"),

.attention = 0.59, .weight = 0.58,

.add_tags = std::optional<std::vector<std::string>>({"cdc","relay","idempotency"})

}},

// (fragment: the tail of an entry's [](auto& w){ ... } lambda, as in the file above)

w.intention = "constrain programs so invalid operations are rejected";

w.about = "formal discipline over values and operations";

w.weight=0.60; w.attention=0.61;

w.reminds_you_of={"polymorphism","effects","proofs"};

w.tags={"types","theory","lang"};

w.is_technical_term = true;

w.is_math = true;

})
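The `defs::WordPatch` entry above wraps its fields in `std::optional`, which suggests a patch only overrides what it actually carries. The real `WordPatch` type isn't shown in this post, so the following is only a sketch of how such an overlay could be applied. `Entry`, `Patch`, and `apply_patch` are hypothetical names, the field types are guesses, and it needs C++17 for `std::optional`.

```cpp
#include <optional>
#include <string>
#include <vector>

// Hypothetical stand-ins; the real WordDefinition and defs::WordPatch are
// not reproduced here, so these field names and types are assumptions.
struct Entry {
    std::string intention;
    double weight = 0.0;
    double attention = 0.0;
    std::vector<std::string> tags;
};

struct Patch {
    std::optional<std::string> intention;              // replace if present
    std::optional<double> weight;                      // replace if present
    std::optional<double> attention;                   // replace if present
    std::optional<std::vector<std::string>> add_tags;  // append if present
};

// Applies only the fields the patch actually carries; everything else in
// the entry is left untouched.
inline void apply_patch(Entry& e, const Patch& p) {
    if (p.intention) e.intention = *p.intention;
    if (p.weight) e.weight = *p.weight;
    if (p.attention) e.attention = *p.attention;
    if (p.add_tags) {
        e.tags.insert(e.tags.end(), p.add_tags->begin(), p.add_tags->end());
    }
}
```

A patch that sets only `attention` and `add_tags` leaves the entry's `weight` and `intention` exactly as they were, which is the point of making every field optional.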

The rest of the parameters are in artificial-001.03.cpp, or main.cpp:

#include <iostream>

#include <vector>

#include <string>

#include <sstream>

#include <algorithm>

#include <map>

// --- THE COMPLETE KNOWLEDGE BASE ---

// Including all 12 of the data files we have built together.

#include "parts_of_speech.hpp"

#include "root_words.hpp"

#include "physical_objects.hpp"

#include "abstract_concepts.hpp"

#include "made_of.hpp"

#include "type_to_noun.hpp"

#include "ideologies.hpp"

#include "fields_of_study.hpp"

#include "scientific_theories.hpp"

#include "digrams.hpp"

#include "trigrams.hpp"

#include "quadgrams.hpp"

namespace words_and_letters {

// This structure holds the complete analysis for a single word (token).

struct AnalyzedToken {

std::string word;

// Layer 1: Fundamental Grammatical Type

enum PartOfSpeech {

POS_UNKNOWN, NOUN, VERB, ADJECTIVE, ADVERB, PREPOSITION, CONJUNCTION, PRONOUN, DETERMINER

} part_of_speech = POS_UNKNOWN;

// Layer 2: Specific Semantic Category

enum TokenType {

TYPE_UNKNOWN, PHYSICAL_OBJECT, ABSTRACT_CONCEPT, MATERIAL, IDEOLOGY, FIELD_OF_STUDY, SCIENTIFIC_THEORY

} type = TYPE_UNKNOWN;

// Layer 3: Relational Knowledge

std::string is_a_type_of = "";

};

class word_and_prompt {

private:

std::vector<AnalyzedToken> analyzed_prompt;

// Helper function to find the general noun for a specific type using our map.

std::string find_noun_for_type(const std::string& word_to_check) {

for (const auto& pair : noun_to_types_map) {

const std::string& general_noun = pair.first;

const std::vector<std::string>& types_list = pair.second;

if (std::find(types_list.begin(), types_list.end(), word_to_check) != types_list.end()) {

return general_noun; // We found it!

}

}

return ""; // Not found

}

public:

void call_set_the_prompt(const std::vector<std::string>& prompt_input) {

analyzed_prompt.clear();

for (const auto& sentence : prompt_input) {

std::stringstream ss(sentence);

std::string word;

while (ss >> word) {

if (!word.empty() && (word.back() == '.' || word.back() == ',')) { word.pop_back(); }

analyzed_prompt.push_back({word});

}

}

std::cout << "Prompt has been received and tokenized.\n" << std::endl;

}

void parse_prompt() {

std::cout << "--- BEGINNING FULL ANALYSIS ---" << std::endl;

for (auto& token : analyzed_prompt) {

// --- STAGE 1: PART-OF-SPEECH TAGGING ---

if (std::find(pos_nouns.begin(), pos_nouns.end(), token.word) != pos_nouns.end()) token.part_of_speech = AnalyzedToken::NOUN;

else if (std::find(pos_verbs.begin(), pos_verbs.end(), token.word) != pos_verbs.end()) token.part_of_speech = AnalyzedToken::VERB;

else if (std::find(pos_adjectives.begin(), pos_adjectives.end(), token.word) != pos_adjectives.end()) token.part_of_speech = AnalyzedToken::ADJECTIVE;

else if (std::find(pos_adverbs.begin(), pos_adverbs.end(), token.word) != pos_adverbs.end()) token.part_of_speech = AnalyzedToken::ADVERB;

else if (std::find(pos_prepositions.begin(), pos_prepositions.end(), token.word) != pos_prepositions.end()) token.part_of_speech = AnalyzedToken::PREPOSITION;

else if (std::find(pos_conjunctions.begin(), pos_conjunctions.end(), token.word) != pos_conjunctions.end()) token.part_of_speech = AnalyzedToken::CONJUNCTION;

else if (std::find(pos_pronouns.begin(), pos_pronouns.end(), token.word) != pos_pronouns.end()) token.part_of_speech = AnalyzedToken::PRONOUN;

else if (std::find(pos_determiners.begin(), pos_determiners.end(), token.word) != pos_determiners.end()) token.part_of_speech = AnalyzedToken::DETERMINER;

// --- STAGE 2: SEMANTIC & TAXONOMICAL ANALYSIS ---

if (token.part_of_speech == AnalyzedToken::NOUN) {

std::string noun = find_noun_for_type(token.word);

if (!noun.empty()) {

token.is_a_type_of = noun;

}

if (std::find(scientific_theories.begin(), scientific_theories.end(), token.word) != scientific_theories.end()) token.type = AnalyzedToken::SCIENTIFIC_THEORY;

else if (std::find(ideologies.begin(), ideologies.end(), token.word) != ideologies.end()) token.type = AnalyzedToken::IDEOLOGY;

else if (std::find(fields_of_study.begin(), fields_of_study.end(), token.word) != fields_of_study.end()) token.type = AnalyzedToken::FIELD_OF_STUDY;

else if (std::find(physical_objects.begin(), physical_objects.end(), token.word) != physical_objects.end()) token.type = AnalyzedToken::PHYSICAL_OBJECT;

else if (std::find(abstract_concepts.begin(), abstract_concepts.end(), token.word) != abstract_concepts.end()) token.type = AnalyzedToken::ABSTRACT_CONCEPT;

else if (std::find(materials.begin(), materials.end(), token.word) != materials.end()) token.type = AnalyzedToken::MATERIAL;

}

}

std::cout << "--- ANALYSIS COMPLETE ---\n" << std::endl;

}

void display_analysis() const {

std::cout << "--- DISPLAYING ANALYSIS RESULTS ---" << std::endl;

for (const auto& token : analyzed_prompt) {

std::cout << "Word: '" << token.word << "'" << std::endl;

std::cout << " - Part of Speech: ";

switch (token.part_of_speech) {

case AnalyzedToken::NOUN: std::cout << "NOUN"; break;

case AnalyzedToken::VERB: std::cout << "VERB"; break;

case AnalyzedToken::ADJECTIVE: std::cout << "ADJECTIVE"; break;

case AnalyzedToken::ADVERB: std::cout << "ADVERB"; break;

case AnalyzedToken::PREPOSITION: std::cout << "PREPOSITION"; break;

case AnalyzedToken::CONJUNCTION: std::cout << "CONJUNCTION"; break;

case AnalyzedToken::PRONOUN: std::cout << "PRONOUN"; break;

case AnalyzedToken::DETERMINER: std::cout << "DETERMINER"; break;

default: std::cout << "UNKNOWN"; break;

}

std::cout << std::endl;

if (token.type != AnalyzedToken::TYPE_UNKNOWN) {

std::cout << " - Semantic Type: ";

switch (token.type) {

case AnalyzedToken::PHYSICAL_OBJECT: std::cout << "PHYSICAL_OBJECT"; break;

case AnalyzedToken::ABSTRACT_CONCEPT: std::cout << "ABSTRACT_CONCEPT"; break;

case AnalyzedToken::MATERIAL: std::cout << "MATERIAL"; break;

case AnalyzedToken::IDEOLOGY: std::cout << "IDEOLOGY"; break;

case AnalyzedToken::FIELD_OF_STUDY: std::cout << "FIELD_OF_STUDY"; break;

case AnalyzedToken::SCIENTIFIC_THEORY: std::cout << "SCIENTIFIC_THEORY"; break;

default: break;

}

std::cout << std::endl;

}

if (!token.is_a_type_of.empty()) {

std::cout << " - Is a Type Of: '" << token.is_a_type_of << "'" << std::endl;

}

std::cout << std::endl;

}

std::cout << "------------------------------------" << std::endl;

}

};

}

int main()

{

words_and_letters::word_and_prompt the_parser;

std::vector<std::string> the_prompt = {

"the", "brave", "poodle", "studies", "physics", "and", "democracy", "with", "great", "love"

};

the_parser.call_set_the_prompt(the_prompt);

the_parser.parse_prompt();

the_parser.display_analysis();

return 0;

}

Here’s a picture of all of the folders that make up the artificial intelligence.

There are methods for searching, methods for parts of speech, and so on.
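The tagging stage in `parse_prompt` above checks each word against one list after another with `std::find`. As a rough sketch of the same idea with a single hash lookup per word, here is a tiny tokenizer and tagger. The lexicon is a made-up five-word sample (the real lists live in `parts_of_speech.hpp`, which isn't shown), `Pos`, `tiny_lexicon`, `tokenize`, and `tag` are hypothetical names, and unlike the original tokenizer this one also lowercases and strips any leading or trailing punctuation.

```cpp
#include <cctype>
#include <sstream>
#include <string>
#include <unordered_map>
#include <vector>

enum class Pos { Unknown, Noun, Verb, Adjective, Determiner };

// Hypothetical miniature lexicon; the real word lists are in the
// parts_of_speech.hpp header, which is not shown in this post.
inline const std::unordered_map<std::string, Pos>& tiny_lexicon() {
    static const std::unordered_map<std::string, Pos> lex = {
        {"the", Pos::Determiner}, {"brave", Pos::Adjective},
        {"poodle", Pos::Noun},    {"studies", Pos::Verb},
        {"physics", Pos::Noun},
    };
    return lex;
}

// Splits on whitespace, strips leading/trailing punctuation, lowercases.
inline std::vector<std::string> tokenize(const std::string& text) {
    std::vector<std::string> tokens;
    std::istringstream in(text);
    std::string word;
    while (in >> word) {
        while (!word.empty() &&
               std::ispunct(static_cast<unsigned char>(word.back())))
            word.pop_back();
        while (!word.empty() &&
               std::ispunct(static_cast<unsigned char>(word.front())))
            word.erase(word.begin());
        for (char& c : word)
            c = static_cast<char>(std::tolower(static_cast<unsigned char>(c)));
        if (!word.empty()) tokens.push_back(word);
    }
    return tokens;
}

// One hash lookup per token instead of a chain of std::find scans.
inline Pos tag(const std::string& word) {
    const auto& lex = tiny_lexicon();
    auto it = lex.find(word);
    return it == lex.end() ? Pos::Unknown : it->second;
}
```

One trade-off to keep in mind: a single map gives each word exactly one part of speech, whereas the chain of lists in `parse_prompt` takes whichever list matches first. Filling the map in that same priority order would keep the behavior equivalent.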